How To Get Started With Technical SEO

What is technical SEO?

Technical SEO refers to server and website optimizations that help search engines crawl and index your website. Keyword stuffing, a good site architecture on its own, and images on your webpages don’t make the cut anymore. Common technical SEO practices include redirecting bad or outdated links, optimizing for page speed, mobile responsiveness, schema, and error management.

Schema

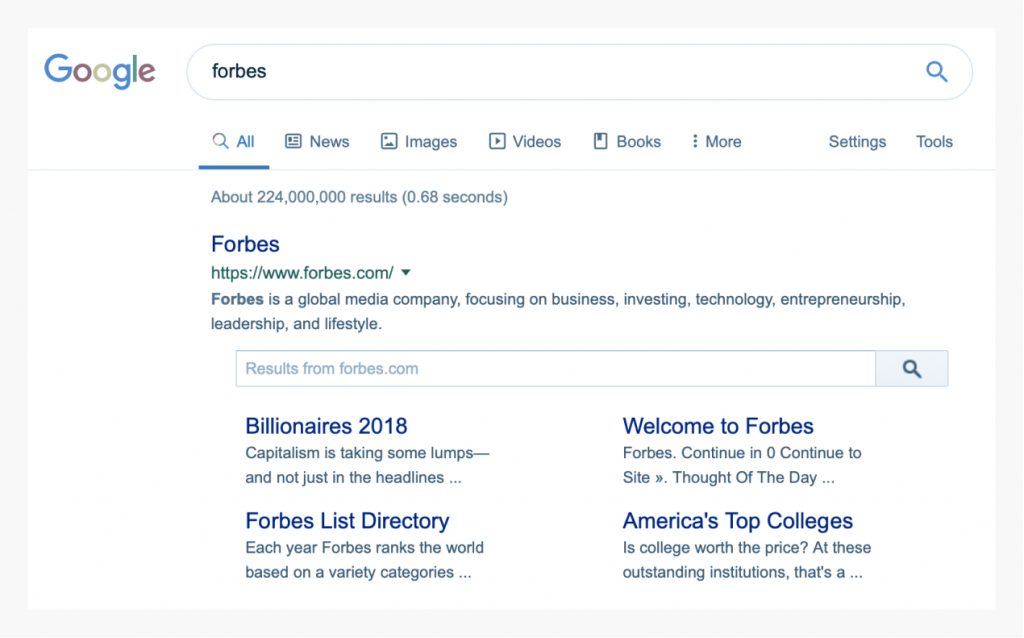

Schema, an added layer of code inserted into your website’s HTML, improves how search engines crawl your website and later present it in the Search Engine Results Page (SERP). In short, it adds contextual markup to your webpages, which gives crawlers more detail about the different pieces of relevant information presented on a given page.

Schema optimizations also support the user experience by connecting additional pages with deeper context. Depending on the SERP, Schema will make connections between content and provide deep links relevant to the search. Giving users more links to your site will quickly guide them to their answer and in turn improve overall user experience.

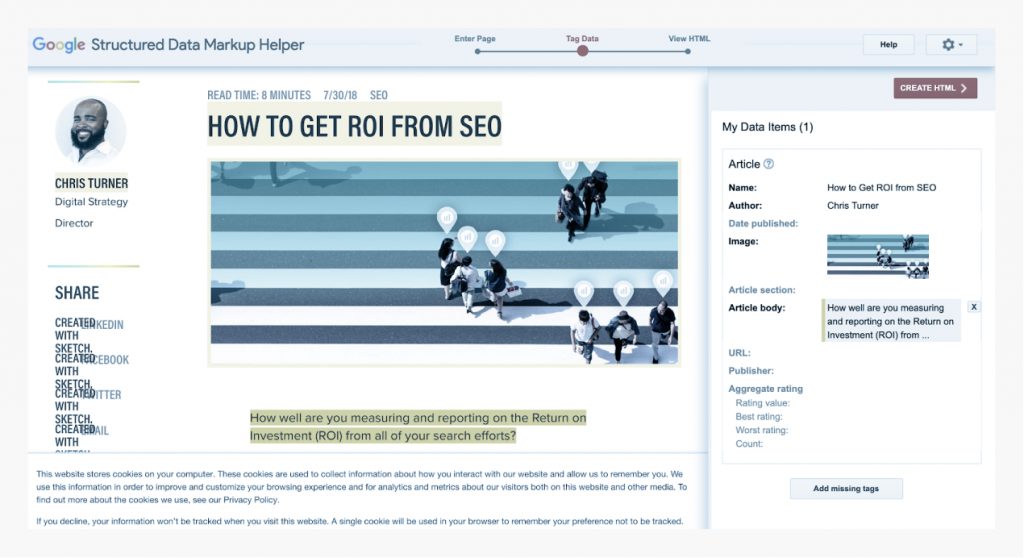

One way to implement Schema on your website is to head to Google’s Structured Data Markup Helper. You can then select a data type (articles, events, restaurants, datasets, etc.) and paste HTML or a URL. Then, select “Start Tagging” to enter data items.

Let’s say you entered the URL of one of your blog articles. The next step is to highlight items in the article to populate the Data Items list. If there are items on the webpage that you want to tag, but that aren’t shown as ready options, there’s an “Add missing tags” feature on the lower right-hand side of the program. Once you’ve declared all of your data items, select “CREATE HTML” to access the code you’ll need to put the data in action.

You’ll see a dropdown menu above your markup. If you switch it from JSON-LD to Microdata, the program will highlight the lines of code you need to enter into your website’s HTML. If you want to preview your work or check for errors, Google has a Structured Data Testing Tool linked in the button labeled “Finish”.
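
If you would rather see what the JSON-LD output looks like before using the helper, here is a minimal Python sketch that builds an Article snippet by hand. The function name `article_jsonld` and the sample values are hypothetical and only for illustration; the property names (`headline`, `author`, `datePublished`, `mainEntityOfPage`) come from the schema.org Article type.

```python
import json


def article_jsonld(headline, author_name, date_published, url):
    """Return a <script> tag holding JSON-LD for a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2020-01-01"
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2)
    # Escape "</" so a value can never close the <script> tag early.
    body = body.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(article_jsonld(
        "How To Get Started With Technical SEO",
        "Jane Doe",
        "2020-01-01",
        "https://www.mywebsite.com/blog/technical-seo/",
    ))
```

The printed tag would typically go in the page’s HTML, and it can be checked with the same testing tool mentioned above.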

Robots.txt

Robots.txt is a file that holds directives for how search engine crawlers should scan your website. It’s best to give crawlers access to your entire website and to reference sitemaps. Make sure to reference a sitemap for videos and a sitemap for all content files. If the need arises–perhaps the website is still in the developmental process–the robots.txt file will guide crawlers away from unwanted areas.

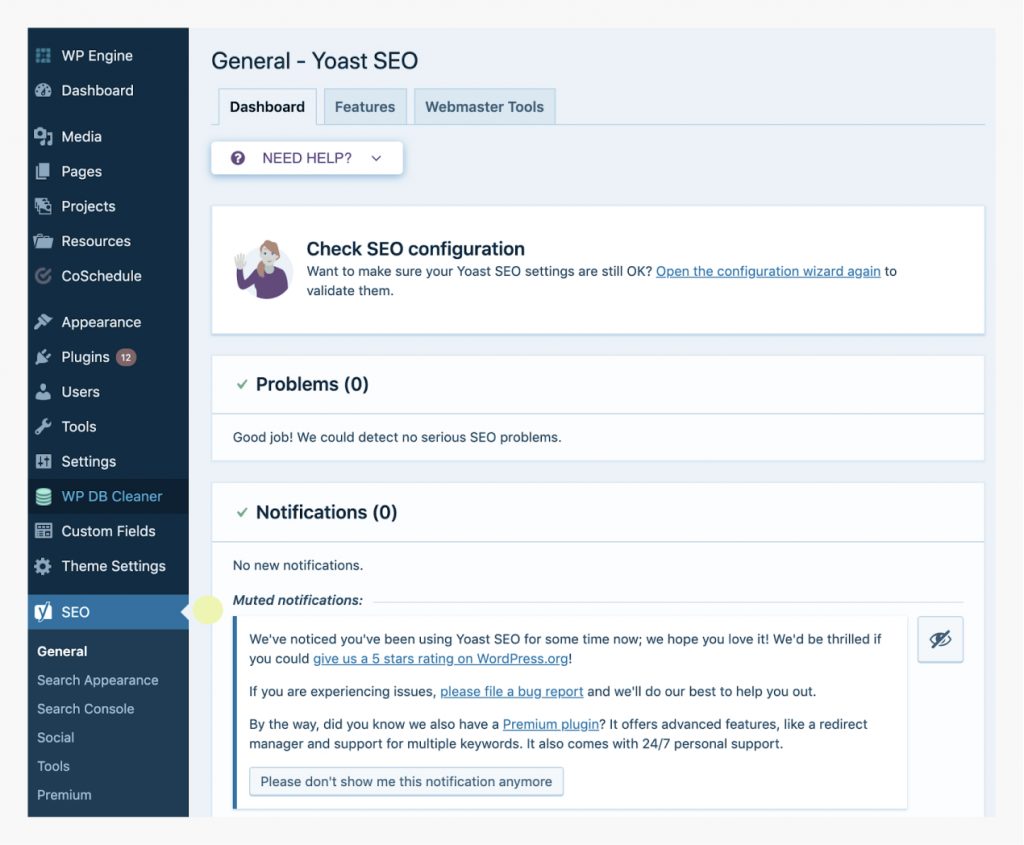

If you maintain your website with a tool like WordPress, Yoast (an SEO plugin) is a useful way to construct a sitemap.xml file with indices for different webpage categories and to then call out that sitemap.

Select “File editor” under Tools in the Yoast dropdown menu. Then, simply create and edit the content it generates. If you’re set on building your own robots.txt file, or want to familiarize yourself with how it’s constructed and formatted, here’s an example:

User-agent: * # the asterisk addresses all bots and isn’t often changed

Allow: /

Sitemap: https://www.mywebsite.com/GoogleSitemap.xml

Sitemap: https://www.mywebsite.com/VideoSitemap.xml

To make your own, create an empty file in a text editor named “robots.txt” and upload it to your server. You should then be able to edit the file from the plugin. “Allow:” followed by a “/” tells search engines to crawl anything that’s not explicitly blocked. If you want to block webpages, then insert a “Disallow:” followed by the /slug/ of the URL. To find and call out your website’s sitemaps, select “Features” under General in the Yoast dropdown menu. Then, press the question mark next to XML sitemaps and select “See the XML sitemap”. Finally, make sure to save your changes before exiting the plugin.

User-agent: *

Allow: /

Disallow: /slug/

Disallow: /slug/

Disallow: /slug/

Sitemap: https://www.mywebsite.com/GoogleSitemap.xml

Sitemap: https://www.mywebsite.com/VideoSitemap.xml
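
To check how rules like the ones above play out, here is a small Python sketch of the matching logic. It is a simplification under stated assumptions: it ignores User-agent groups and wildcards, and it applies the "most specific (longest) matching rule wins, Allow wins ties" convention that Google documents. The helper names `parse_robots` and `is_allowed` are hypothetical.

```python
ROBOTS_TXT = """\
User-agent: *
Allow: /
Disallow: /slug/
Sitemap: https://www.mywebsite.com/GoogleSitemap.xml
Sitemap: https://www.mywebsite.com/VideoSitemap.xml
"""


def parse_robots(text):
    """Collect (directive, path prefix) rules and sitemap URLs.

    Simplified: treats the whole file as one group for all bots.
    """
    rules, sitemaps = [], []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field in ("allow", "disallow") and value:
            rules.append((field, value))
        elif field == "sitemap":
            sitemaps.append(value)
    return rules, sitemaps


def is_allowed(path, rules):
    """Longest matching prefix wins; Allow wins a tie; default is allowed."""
    best_directive, best_prefix = "allow", ""
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        longer = len(prefix) > len(best_prefix)
        tie_for_allow = len(prefix) == len(best_prefix) and directive == "allow"
        if longer or tie_for_allow:
            best_directive, best_prefix = directive, prefix
    return best_directive == "allow"


if __name__ == "__main__":
    rules, sitemaps = parse_robots(ROBOTS_TXT)
    for path in ("/blog/post/", "/slug/private-page/"):
        print(path, "allowed" if is_allowed(path, rules) else "blocked")
    print(sitemaps)
```

Not every crawler resolves conflicts the same way, so treat this as a way to reason about a file rather than a guarantee of crawler behavior.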

Note that, as of September 2019, Google and many other crawlers that keep up with standards no longer follow the ‘noindex’ directive in robots.txt. This directive asked the crawler, and the index it feeds, not to include the URLs it listed. Read more about this update in the official Google announcement on their Webmaster blog.

Duplicate Content

Duplicate content refers to a block of content that either closely resembles or completely matches other content. There may be instances where duplicate content is convenient for SEM efforts, email nurturing, unique campaigns, etc., but your web page or website will still receive a penalty. If you absolutely need to duplicate content, be sure to use canonical tags on every page. Canonical tags warn search engine crawlers of duplicate content and signal which page is the source.

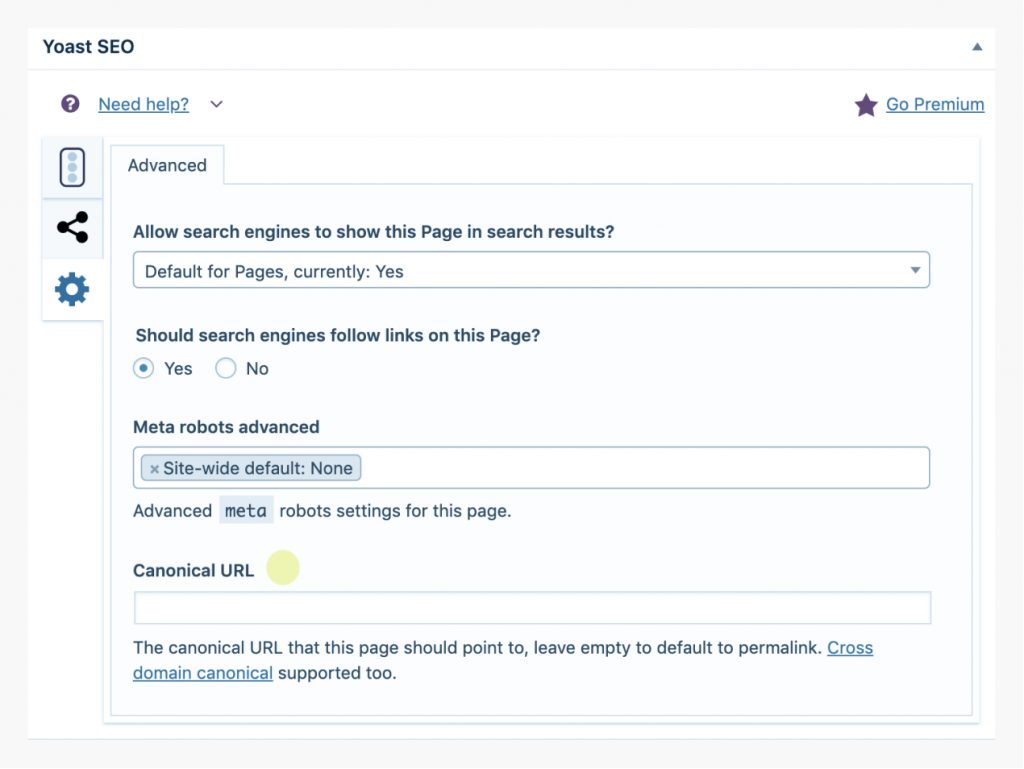

To add a canonical tag with the Yoast SEO plugin in WordPress, look through your site and record any instances of similar content. Open a page in WordPress that you’d like to mark as duplicated content and scroll all the way down to the plugin. Select the Advanced tab and paste the URL of the original content source under “Canonical URL”.
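
In the page’s HTML, a canonical tag is a `<link rel="canonical" href="...">` element in the `<head>`. As a rough way to confirm that a page carries the tag you expect, here is a Python sketch using only the standard library. The class and function names, and the sample page, are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr_map.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical duplicate page that points back to its original source.
SAMPLE_PAGE = (
    "<html><head>"
    '<link rel="canonical" href="https://www.mywebsite.com/original-post/">'
    "</head><body>Duplicated copy</body></html>"
)

if __name__ == "__main__":
    print(find_canonical(SAMPLE_PAGE))
```

Running a check like this over every page you recorded as a duplicate is a quick way to spot pages where the tag is missing.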

You may receive an additional penalty for duplicate content in the form of multiple domains. For example, if you operate a www website and a similar or identical non-www website (or the other way around), search engines may penalize you for duplicate content. The indices have become more aware of these scenarios and have reduced the impact of the penalties, but there is still a negative impact. Make sure to enforce one version of a domain.

To do so, edit and enter the following code into the .htaccess file:

RewriteEngine On

RewriteCond %{HTTP_HOST} ^www\.yourdomain\.com$ [NC]

RewriteRule ^(.*)$ http://yourdomain.com/$1 [L,R=301]

If you’re working with an older website, chances are it uses PHP. This may leave one or many of the website’s links with a /index.php version that serves duplicate content. In this case, you’d need to redirect the /index.php version to the original version of the page. To do this and avoid any similar errors throughout the site, edit and enter the following code into the .htaccess file:

RewriteEngine on

RewriteCond %{THE_REQUEST} ^[A-Z]{3,9}\ /.*index\.php\ HTTP/

RewriteRule ^(.*)index\.php$ /$1 [R=301,L]
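
Before relying on the redirects, it helps to write down where each URL should end up. The Python sketch below mirrors the intent of the two rule sets above (drop the www host, drop a trailing index.php) so you can build a list of expected targets to compare against your server’s responses. It is an approximation, not a reimplementation of mod_rewrite: it keeps the original scheme and ignores ports, and the name `expected_redirect` is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "yourdomain.com"  # placeholder domain used in the rules above


def expected_redirect(url):
    """Return the URL a request should be redirected to, or None if none applies."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # hostname is case-insensitive
    path = parts.path or "/"

    new_host, new_path = host, path
    if host == "www." + CANONICAL_HOST:
        new_host = CANONICAL_HOST  # enforce the non-www version
    if new_path.endswith("/index.php"):
        new_path = new_path[: -len("index.php")]  # keep the trailing slash

    if (new_host, new_path) == (host, path):
        return None
    # Assumption: the scheme and query string are carried over unchanged.
    return urlunsplit((parts.scheme, new_host, new_path, parts.query, ""))


if __name__ == "__main__":
    for url in (
        "http://www.yourdomain.com/blog/index.php",
        "http://yourdomain.com/about/",
    ):
        print(url, "->", expected_redirect(url))
```

Comparing this list with the Location headers your server actually returns is one way to catch a rule that never fires.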

Mobile-First Approach

We’re living in a mobile-first culture, which means your digital marketing needs to be mobile-first, too.

Mobile Indexing

Mobile-first indexing means that Google primarily uses the mobile version of a page for indexing and ranking, so search results favor websites that are mobile-friendly (in terms of load speed, touch points, and functionality) over desktop-only websites. Desktop-focused websites still have a higher percentage of visits, so the key here is to make sure your website is optimized on desktop while also being responsive on mobile. Head over to Google for best practices when building and/or optimizing a mobile site.

Why You Should Know About AMP

The Accelerated Mobile Pages Project (AMP) enables the creation of websites that are attractive, fast, and high-performing across all devices.

Google is a supporter of AMP, and anything Google promotes will impact search rankings and digital visibility. The only potential downside of AMP is that it generates an AMP link when copied and shared. The unfamiliar presentation may confuse users who are unaware of AMP.

- You’ll need two versions of all article pages–the original version and an AMP version.

- AMP doesn’t naturally support third-party JavaScript. Because of this, you’ll need to overhaul webpages that include elements like lead forms and on-page comments.

- You may need to rewrite your site template to support all AMP restrictions.

- Validate your AMP pages. You can do this with a developer tool built into Chrome.

Clean URLs

Just like the rest of your website, your URLs need to be user-friendly. Avoid characters such as %, ?, and &. Clean URLs have no special characters, have consistent separation (think dashes vs. underscores), and provide a chance to utilize keywords.
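
As an illustration of those guidelines, here is a minimal Python sketch that turns a page title into a clean slug: lowercase, ASCII only, with dashes as the single separator. The function name `slugify` is hypothetical, and the choice of dashes over underscores is an assumption; what matters is staying consistent.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, dash-separated URL slug."""
    # Strip accents by decomposing characters and dropping non-ASCII bytes.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Collapse every run of non-alphanumeric characters into one dash.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


if __name__ == "__main__":
    print(slugify("How To Get Started With Technical SEO"))
    print(slugify("Q&A: 100% Clean URLs?"))
```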

In Conclusion

Don’t worry if you’re a little confused. Learning about technical SEO can either feel like riding a bike or reading a foreign language. Either way, understanding and implementing technical SEO is worth the effort and will take your skills and website to the next level.

The views included in this article are entirely the work and thoughts of the author, and may not always reflect the views and opinions of Regex SEO.
